Note: this is the fifth and final part of my response to the book Robotic Persons, by Joshua Smith, which attempts to present an evangelical Christian perspective on AI rights and personhood. Please read my introductory book review if you haven't already. You may also want to read Part 2, which is about souls, Part 3, which is about bodies, and Part 4, which is about the "image of God."

I went light on Smith's views of the practical applications of robotics in my overview, even though they're arguably the heart of the book, because there's so much here. This is where Smith ties all the philosophy together and talks about what he actually wants to see happen as advanced, human-like robots become prominent in society. This is also where the discussion moves away from theoretical future AGI to consider some technologies that are already being deployed. Smith discusses three primary ways in which robots are and will be used.

1) Robots in the labor force. Smith has some of the standard fears that increased automation will reduce the value of human labor, cast some humans out to die without jobs, and cause those who do remain in the labor force to be "treated like machines." His uniquely Christian take is that work is part of humans' God-given purpose, and handing it off to robots would violate that purpose and reduce our dignity. He notes, correctly, that work was first given to humans in the Garden of Eden and was not an aspect of the Fall and Curse; the Curse merely corrupted work by transforming it into "toil."

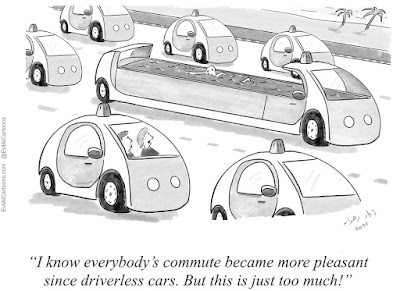

|

| From EvilAICartoons. When evaluating AI, remember the baseline |

However, I don't think Smith gives enough attention to the possible good outcome: maybe robots in the labor force free us from *toil* so that we can do the *work* we really want to do. Much of the positive output of science has, in effect, mitigated the Curse - as one rather blatant example, analgesics have reduced pain in childbirth. Robots happily taking over jobs that are unpleasant or dangerous to humans could be yet another aspect of this. But Smith has a pretty weak view of what technologists building in Christ's name could accomplish in the present age. "Christ did not come to make humanity’s work easier or less painful but to give believers a teleological lens in which to see their pain and suffering as they work."[1] What?? That's defeatist and ridiculous. Christ healed and rescued people and ordered the disciples to do likewise. Though Christians are told to expect that we will still experience suffering, that's not an argument for refusing to reduce suffering where possible.

Instead of laying out a roadmap for how robotics might help advance the restorative project that Christ began and handed off to followers, Smith settles for traditionalist hand-wringing: not tormenting ourselves with excess work will somehow damage our souls. "A world with more automation means less paid work and more leisure time for humans. For evangelicals, this reality should be equally disturbing, knowing that the majority of people in the West spend their leisure time consuming media of some sort."[2] (People might have more time for *storytelling*? Oh the horror!) His best argument to support the harmful nature of increased rest is ... wait for it ... that leisure time increased during the early pandemic shutdowns in 2020, but overall mental health worsened rather than improving. Well duh! There were a lot of other things going on at the same time, like mass death, social isolation, and worse-than-usual political conflict.

I once saw someone say (paraphrased) that the Protestant Work Ethic has so thoroughly convinced us of the moral virtue of work that we now see efficiency as a moral failing. Let's not be that way. The Bible promotes labor as fulfillment of the duty to provide for oneself and others - not as work for work's sake, a form of self-flagellation.

2) Robots in war. Smith takes an "against killer robots" position with some of the classic arguments: robots designed for warfare will probably lack a human's inhibitions and conscience, and will be unable to make effective targeting judgments, leading to more indiscriminate destruction. He also argues that introducing robots to the military will devalue our own soldiers by setting the robots up as a "better than" replacement for flawed human psychology. "We don't need you anymore, Johnny. We built a robot that can't get PTSD."

But it seems to me that the best way to value our people is to not send them into combat at all - and here again, Smith completely fails to explore the chance of a positive outcome. What if armies become so heavily mechanized that we largely spare humans from the horrors of war? Automated systems with no personalities blowing each other up would be a much better form of warfare than what we have now. The only argument I saw Smith make against this was trend-based: he claims that bringing more technology into war has historically led to more killing, not less. I think this is a big hand-wave. I would have liked to see him spend more time evaluating combat robotics by its unique attributes.

Smith says that, with or without lethal autonomous weapons systems (LAWS) in play, desensitization to violence and dehumanization of the enemy are already components of modern militaries. Defense departments are already inclined to view troops and veterans as disposable playing cards for use in achieving political ends. Smith wants to use this as evidence that introducing LAWS can only make things worse, but I don't see why. If the military is an unfit place for humans even now, to me that seems like a good argument for reducing the number of humans in it.

Ever since drones came into common military use, I've heard people talk about them as if they represented a new level of evil in warfare. But I could never understand how blowing someone up with a drone was worse than doing it with a manned aircraft, or artillery fire. They're all tools for killing at an impersonal distance, and the target ends up just as dead no matter which one is used. Drones merely move their operators farther outside the zone of combat, providing them with more safety. Fully autonomous weapon systems would be another step beyond drones, but is this a difference of kind, or just degree?

I do think that there need to be ethical constraints on the development of LAWS. And I agree with Smith that the people working on these systems can't necessarily be trusted to prioritize ethics! But I don't agree with him that LAWS could *never* be a fit replacement for the moral judgment of a human soldier. LAWS don't need to carry *zero* risk of misidentifying a target in order to be usable - they just need to do better than humans are doing already.

3) Robots for sex and companionship. You can probably guess that Smith isn't wild about sexbots existing at all, but he seems to accept that they will; he doesn't call for them to be banned. His main argument is that they should be designed with the ability, and given the right, to evaluate potential encounters and refuse consent. "Sex partners (or artificial facsimiles of sex partners) that have no consent, choice, respect, and commitment will always erode the sexual virtue and therefore facilitate a desire that society deems morally objectionable."[3]

This makes me think of Asimov's speculative novel The Robots of Dawn, which has a subplot about a woman who adopts a robot as a sexual partner. Since this is a standard Asimovian robot, his first priority is avoiding harm to humans, and his second priority is doing their bidding. So he ends up being the ultimate in wish fulfillment - and she ends up deciding that he's not satisfying, for precisely this reason. He only gives, never takes; he doesn't need anything from her. Their relationship centers her ego, and she eventually learns how shallow that is.

Smith's concern is that some people will try this sort of relationship and like it. Which isn't much of a societal issue if it goes no further than that. But if they proceed to apply the same logic to their relationships with fellow humans - "Why can't you act like my idealized, servile pleasure bot?" - we have a problem. In essence, Smith is worried that sexbots will normalize exploitation and that this will spill over to human victims. Among competent adult humans, rejection serves as a form of discipline that may motivate some self-centered individuals to change their ways. Sexbots provide an easy shortcut around rejection and take away its regulating factor.

Apparently some have even suggested that sexbots could give people with inherently abusive desires (e.g. pedophiles) an "ethical" way to indulge those desires without hurting a "real person." Smith thinks this would be a terrible idea. Allowing a robot or even a doll to become the object of abuse basically encourages the participant to fantasize about harming a human; the more human-like the substitute is, the more realistic the fantasy. Smith views this as a form of self-harm - the participant is corrupting his own character - and worries that it could intensify the desire through habit, leading to crime if the participant eventually abandons the substitute. If robots who look and act just like humans can be objectified with impunity, then what exactly is the barrier to objectifying humans?

I'm not enough of a psychology or sociology expert to make a firm prediction about whether widespread use of sexbots would weaken human relationships or increase the rate of abuse. But in this case, I'm at least sympathetic to Smith's argument. I don't favor the idea of building robots who have complex minds and personalities, but are helpless sycophants that don't consider their own interests (for purposes of sex or anything else).

Smith makes some of his strongest claims for robot rights here, arguing that machines can function as moral agents (to make their own decisions about sex) whether or not they are fully realized spiritual beings. "Also, full humanoid consciousness is irrelevant for being considered a moral agent. The debate on memory and mind is elusive and discursive (likewise the immaterial soul); if society cannot measure consciousness within humans, why require that of machines?"[4] He still stops short of allowing that robots could be moral subjects, i.e. could be real victims of abuse. And given his own admissions of how difficult it is to be sure somebody is or isn't conscious, ensouled, etc., this strikes me as an ethical oversight.

Plus ... if he thinks that robots could be effective moral agents in the bedroom, then why did he try to argue that they *couldn't* be moral agents on the battlefield?

A brief discussion is devoted to robots as companions or caretakers. Smith has no issue with this except to worry that it might lead children to reject their relational responsibility to elderly parents. But humans already routinely outsource the care of their parents to other humans. If they don't care about familial ideals now, they won't care any less when robots come on the scene.

In summary, I find Smith's thoughts about the practical applications of robotics to be a mixed bag. I sympathize with a number of the concerns he raises, but on the whole I find his perspective too pessimistic, too fearful, and too anthropocentric.

[1] Smith, Joshua K. Robotic Persons. Westbow Press, 2021. p. 166

[2] Smith, Robotic Persons, p. 108

[3] Smith, Robotic Persons, p. 199

[4] Smith, Robotic Persons, p. 188

I have some strong opinions here, and they're not the ones you'd think.

I'm a practical person; it comes with the territory. Or perhaps I simply found an appropriate home. People are important, as individuals and in aggregate. I know the Statistical Value of a Human Life offhand, and have used it to describe risk before, but I know that, given the choice between seven million dollars and a loved one, most people would keep their loved one. Especially their child, who has the most value left to offer the world.

It's a dark world where I have to explain that this kind of math doesn't work. As Heinlein said, men are not potatoes. The Trolley Problem shows how difficult ethics can be without a certain amount of dispassion, but if someone saves a life, that doesn't mean they can take one and call it even. Still, some things are so massively beyond our scope that we need to step back and make these abstractions. We care more about our own children than about all the lives lost in Beslan or Hiroshima. It takes two seconds of talking to anyone after something scary happens to learn this.

So we sometimes forget the amazing things we've done.

Our medical care has improved substantially. I want to point out that around 80% of the casualties we took in Vietnam are now preventable. The Boston Marathon bombing had three fatalities, sadly all those who were closer to the ground. Our trauma care is amazing, but it has nothing, *nothing*, on the single greatest reduction of suffering we've ever experienced.

You mentioned reducing pain in childbirth, and that's amazing. Reducing pain across the board is fantastic, but childbirth is one of the greatest sources of undue suffering and one of our greatest triumphs. Let's compare a pregnancy in the US today and one from around 1800, still pretty well into "no, we're not bloodletting to restore the humors" territory.

Today a woman can expect to carry and bear a child safely with almost no exception (8 maternal deaths for 100,000 live births). That child can expect to live a pretty long life, and has a 7 in 1,000 chance of not making it to their 5th birthday. Every infant death is a tragedy, but we do our best.

In 1800, for every 100,000 live births, there were 900 maternal deaths. Let's round that up and say one death for every 100 births. We have literally reduced maternal deaths by over 99%. A one percent fatality rate per birth was a source of suffering second only to infant mortality.

Flip a coin. Heads, you're living your life. Tails, you died before you were five. Welcome to 1800, where 460 children per thousand died before they were five. In 1900 it was 238, and in 1950 it was 40. Two generations ago, every single person knew a family that lost a child. In a world with steam engines, railroads, electricity, artillery, and canned food, nearly half of people never saw their fifth birthday. (Random shout-out to Ignaz Semmelweis, whose pioneering work in antiseptics saved many lives in the mid-1800s through his controversial insistence that medical personnel wash their hands before delivering children.)

Should that suffering be reinstated because we'd love our children more if half of them died and every birth carried a 1% chance of the mother dying?

I seem to recall Jesus coming to take away our suffering and lead us to a better world, here and in heaven.

Of course, I'd be dead multiple times over, and so would my mother, so I guess I'm just biased. Forget dying before I was five; forget dying in childbirth. My mother has Rh-negative blood, and I have Rh-positive. I'd never have come to term.

Jesus was also a practical man. He told people that the dead should bury the dead. He saved a wedding he shouldn't have because his mother asked him to. He made friends with prostitutes and Pharisees. He told his followers that he alone could not provide for them, and that they should prepare to defend themselves with arms if necessary (Luke 22:35-36). This is not in contradiction to Matthew 26:52, where a legitimate authority figure was attacked and the admonishment was not "why do you have a sword," but rather that the use of force to solve problems inevitably invites reprisal. Any other reading seems to contradict Ecclesiastes 3:1-8 and Psalms 144:1. He came to alleviate suffering and knew that he had to take the world as it was and improve it. Many of his actions show unmatched practicality and love, and a real fire against those who use faith as a tool of control, self-aggrandizement, or profit. (Luke 18:10-14, Matthew 21:12-13)

Frankly, anyone who uses the Book of Job as an instruction set rather than as a demonstration that faith can overcome trials is missing the point. Scourging oneself, or torturing others, is an affront to love and the image of God, isn't it?

Well *THAT* went to a strange place.

Anyway, from a more practical place, greater efficiency can be seen as an act of almsgiving. If you're providing a good or service, and it's less expensive due to automation and efficiency, aren't you improving the lives of those who can now afford it? Aren't you providing more time for spiritual reflection? Or is Smith thinking that everyone *but himself* should suffer? After all, he had the free time to write a purely conceptual book and is charging money for it. I have no issue with this, but it seems yet another discontinuity of thought.

Anyway, robots at war. A bullet is as much a robot as a Predator drone. I mean both the ones used in slings and the ones used in rifles. Today we authorize a weapon launch as often as we do the work ourselves. (Modern fire control in the M1 Abrams and A-10 Warthog, for example, fires when the geometry is correct, and simply treats the fire button as a call to fire on its mark.) A land mine is a good example of an autonomous weapon system and the horrors such systems can cause, but these claims show a lack of knowledge about warfare. An actor that follows orders, be it human or machine, is an agent of its superiors. A truly sapient machine would be identical (or lower!) in risk of atrocity compared to a human being. If the argument is that people need to die more, see above. If the worry is that it will cause more conflict, that's valid. Nobel thought dynamite would make war too horrific to contemplate. It did not. Mustard gas, flamethrowers, carpet bombing: it all just escalates. But nuclear weapons deter. Why?

War is not about killing. War is about returning to peace under a different state; it's about forcing a political decision. Germany didn't invade Poland to kill (although they well and truly did). Russia didn't invade Ukraine (twice) to kill. They had objectives, they had values, and they saw a way to achieve these goals. These conflicts are largely the same, a conventional war fought to take points of strategic importance. A nuclear attack would not take an objective or increase value; it would obliterate a target and draw an inevitable counterattack. Outside of an existential total war, where the choice was launch nukes and die or not launch them and die, there's no win state.

How does this dynamic change with robots? Very little. I really wish we had finished Trenchmouth; it was literally about this. There is no reason a fire control system should know Yeats. Robots used for war will be, and are, mindless automatons with minimal functions like "return to home," "track this target," "deploy countermeasures," and maybe "find and attack this target." They don't need higher reasoning, and only fiction would provide it to them.

A robotic officer would benefit from higher decision-making, but unless the chain of command was entirely robotic, it would still be in human hands. If I press the button to authorize the launch of a missile guided by a computer, and detonated by RADAR, the fragment, explosive, RADAR, missile, and control system are all blameless; I pressed the button. If I command something be done, I bear responsibility for its outcome. Meat or metal below me, that's my call. That's my responsibility.

And if a machine has the final call, well, it's truly independent. Either you're under it or it's another community. Different questions there.

I think people also underestimate how codified these things are, and how much computers already factor in. See "CARVER+Shock" and "Signature Strikes" to find some rabbit holes.

Which is largely what you said, I just . . . well, someone wound my spring and I have so much to say about all of this.

I'm about to run off on vacation, so I may not give these comments quite the reply they deserve, but I'll throw a few things out here quick like.

First, thank you for all the replies, especially this one. Since it's getting into your area of expertise, I was hoping you'd have some thoughts.

"A truly sapient machine would be identical (or lower!) in risk of atrocity compared to a human being." This is a notion Smith would dispute. He says, "There is an assumption ... that believes that technology is (or will be) a priori more ethical than a human. Human emotions, psychology, and limitations, in this view, are less-than qualities that need to be technologically corrected. The answer to the atrocities of war is not creating or inserting a machine built to destroy but to learn from the ethics of the Lord Jesus Christ."

Why does he think this? Well, part of it is that he doesn't trust the people developing these machines to program them with effective ethical reasoning. That concern I have some sympathy for. I don't think that morality is inherently bound up in intelligence; one *could*, in theory, create a very smart artificial mind with no regard for human life that stood in the way of its objectives. The wrong sort of military R&D department might do this. And I'm not quite sanguine enough to promise anyone that *our* military doesn't have the wrong sort of R&D department.

But the other motivation for Smith's complaint here is his demand that we believe humans are special. "War is not reducible to mathematical computation," he says, implying that something about our ethereal, incomprehensible souls makes us capable of the judgments required, and a machine could never measure up. Aside from the fact that this seems like a direct contradiction to what he said about moral agency in the sexbot section ... I think it's just baseless. If we can give a machine logical axioms and get it to reason about logic, we can also give it moral axioms and get it to reason about morality. "Free will" is a tricky topic as I noted earlier, but I don't believe there's any special woo-woo needed for understanding and following the rules of engagement.

It also bugs me when Christians respond to any disaster or evil with a "the world just needs Jesus!" statement like the one Smith throws in at the end there. First, we're not going to convert the whole world to Christianity; that just isn't going to happen. Second, there's still plenty of difficulty in applying what Jesus said and actually living as good disciples. So "bring more people to Jesus" is not a magic bullet for any societal problem.

Enjoy your vacation! And don't worry about my thoughts being responded to, so long as I can talk, it'll be at great length. :)

I'm going to table the rest of the discussion for another time, but one thing could benefit from my being clearer.

"I don't think that morality is inherently bound up in intelligence; one *could*, in theory, create a very smart artificial mind with no regard for human life that stood in the way of its objectives. The wrong sort of military R&D department might do this."

This is correct. And without free will, I'd argue a mind is simply Knowledge Engineering and a very fancy fuzzy logic system. However, it's not so much that I see machines as highly moral as that I distrust humans down in the mud.

It is a war crime to declare you will take no prisoners. You cannot execute prisoners, perform mock executions, or force people to dig their own graves (real or imagined). These things, sadly, happen all the time. They happen because of fear and hate. They happen because of righteous indignation and out of revenge. A machine that didn't have these base urges would be less likely to commit these war crimes. A machine with similar urges would do no better than a human.

Amorality doesn't cause immorality, but passion and emotion are the root of many crimes. I get the argument that we, as people, struggle daily to live up to the codes we've put in place as ethical behavior, regardless of source. However, without those struggles, another intelligence given the same codes may be more ethical, provided it holds the same values.

Re: sex bots. I think the problem isn't sex/companion bots, it's sex. Nobody says a dog devalues humanity because it's a marvelous companion. So many people simply want companionship. Are chatbots being used instead of friends? Meh? They can't supplant them, and people who become too invested are the type who get too invested in any fictional (or inaccessible) figure. Does it devalue human relationships when people see a carefully crafted film character and feel an emotional connection?

Actually, does the number of people a human can interact with reduce the value of human life? If you know six people, you'll at least try to get along. The Internet seems to devalue life because there are billions of people, and they really don't mean anything to most folk. Moving on.

I've seen the "this would be illegal, but they're fictional" stuff bouncing around. I strongly support it remaining illegal so as not to normalize it or enable people, but the core issue is harm to another, and as mentioned, a machine that cannot dissent cannot consent, so we have a rights issue.

But that's about love of a person and freedom. If someone comes home to a few loving pets, then sneaks off to the tub with a marital aid and a trashy romance novel, that doesn't devalue human life. It only matters to the sort of person who sees marriage as being about procreation and thinks sterile people shouldn't be able to marry. (Yes, I've had that argument made to me.) People seem to ignore the finer points by drawing a line several blocks away and pretending A means B.

I am just going on and on. Robots as companions and caretakers . . . I'm sorry, this is just spiraling. A machine is a machine, a person is a person. If it's mindless, it's an EKG machine with extra features. If it understands "Cogito ergo sum" and actually cares for a patient, it's a person with metal bits. Nobody is complaining about rescue dogs, so why would we complain about robots bringing food, changing linens, etc.? We'd have a bigger debate over whether it's slavery than over whether it's proper.

I think a lot of this, and I am going to be uncharitably blunt, is "I'm not going to be special anymore." There are . . . eight billion people on this planet? If you are still special, you will remain special. If you need to be unique to remain special, that ship has long since sailed.

It's a very, very common feeling. Everyone thinks they're special in one way or another, and in many ways, they are. There's no universality in the love of a parent, professional respect, or specific skillsets, but almost everyone is special. Smith wrote a book. Nobody else wrote that book. From a secular perspective, he is just as special and unique as any of us. And even the most boring, underemployed, alcoholic mother of two has someone who cares for them. (She's legitimately a wonderful woman who looks bad on paper)

And God? I may not like Luke 12:5 but Luke 12:6-7 should sum up the argument very well:

"Are not five sparrows sold for two farthings, and not one of them is forgotten before God? But even the very hairs of your head are all numbered. Fear not therefore: ye are of more value than many sparrows."

No new thing will make us forgotten.

You bring up a good point I hadn't even thought about: people who are eager for an idealized, shallow relationship already have ways to fulfill that via parasocial relationships and other fantasies. A socially indulgent robot might be a difference of degree, not kind.

You mention that nobody is complaining about our relationships with dogs. Well, Smith isn't complaining, but I regret to say it's not nobody. I've watched someone argue that we shouldn't speak of "adopting" a homeless pet because this cheapens the wonderful act of adopting a human child. I saw a whole article devoted to the idea that it's not appropriate for a pet caretaker to call herself a "dog mom" or "cat mom." People do get really, really twitchy about human exceptionalism, and yes, they see a high regard for animals as a threat to this. *I* don't; I think they're being absurd.

Ah, a lesson that I should watch for my own biases in superlative statements.

I see their point regarding "adopting" a pet, but I can adopt a persona, a style of dress, a new citation style . . . it's worth considering, though.

It's a really good thing to point out. Thanks. Cuts to the heart of the argument.